Basic Research for Clinicians

Systematic Reviews: What They Are, Why They Are Important, and How to Get Involved

Volume 1, Oct 2012

Heather MacKenzie, PhD., Ann Dewey, PhD., Amy Drahota, PhD., Sally Kilburn, PhD., Paul R Kalra, MA, FRCP, MD, Carole Fogg, MSc., Donah Zachariah, MBBS, MRCP, Portsmouth, Hampshire, UK

J Clin Prev Cardiol 2012;1(4):193-202

What are systematic reviews?

A systematic review can be defined as “a review of a clearly formulated question that uses systematic and explicit methods to identify, select and critically appraise relevant research, and to collect and analyze data from the studies that are included in the review. Statistical methods (meta-analysis) may or may not be used to analyze and summarize the results of the included studies.” http://www.cochrane.org/glossary

Health care providers are constantly faced with the dilemma of achieving the 6R’s – the right person (a suitably qualified professional), doing the right thing (evidence-based practice), in the right way (skills and competence), at the right time (providing treatments/services when the patient needs them), in the right place (location of treatments/services), with the right result (maximizing health gain) (1). The medical literature on which to base decisions is rapidly growing, but the available research evidence varies in its reliability and in its relevance to different clinical scenarios. Health care providers need to be able to weigh the strengths and weaknesses of such evidence and summarize the findings to make an informed decision. For busy clinicians and health care providers, thank goodness for the systematic review! Systematic reviews attempt to summarize all past research to address a specific clinical question (or questions) using a systematic approach with methods that have been preplanned and documented in a systematic review protocol (2, 3). The types of questions systematic reviews aim to answer can vary significantly, and the diverse nature of the available evidence demands the use of appropriate methodology to describe and synthesize these different types of evidence. These approaches include a comprehensive search for all potentially relevant articles and the use of explicit, reproducible criteria in the selection of articles for a systematic review. Primary research designs and study characteristics are appraised, data are synthesized and results are interpreted (4). Statistical analysis (or meta-analysis) may or may not be used to analyze the results of the included studies.

On completion of the systematic review, the methods used are documented in the review report, similar to that set out in primary research. In this way, readers of the systematic review can see for themselves the steps taken to judge both the quality and reproducibility of the systematic review methods. Systematic reviews can therefore provide the clinician with high-quality and timely research evidence to provide an answer to a focused clinical question (or questions). Evidence thus becomes more accessible to not only health care providers but also their users, that is, patients and their families.

A recent development is the emergence of Overviews of Reviews (OoRs). Systematic reviews have a necessarily narrow focus (e.g., “hypothermia to reduce neurological damage following coronary artery bypass surgery,” Ref. 5); however, it may be more informative for clinicians to be able to access a summary of evidence from a range of related systematic reviews. This is the aim of an OoR. Using the above example, this systematic review might be included in a broader OoR which examines a range of interventions to reduce neurological damage following coronary artery bypass surgery (Figure 1). The methods of an OoR are similar to those of a systematic review, with the exception that where systematic reviews focus on primary research studies, OoRs evaluate and combine information from systematic reviews.

History of systematic reviews

The origin of the first systematic review is unknown, but the approach is usually attributed to one man, Professor Archie Cochrane (a Scottish epidemiologist), whose seminal text “Effectiveness and Efficiency,” published in 1972, drew attention to the lack of reliable evidence on which to base health care decisions. When he later wrote further text urging health practitioners to organize knowledge into a useable and reliable format and to practice evidence-based medicine, others took up this challenge. In the late 1970s and 1980s, a group of health service researchers in Oxford began a program of systematic reviews on the effectiveness of health care interventions. This was followed in 1992 by the establishment of The Cochrane Collaboration, an international, independent and nonprofit organization committed to the principles of managing healthcare knowledge by publishing and updating high-quality systematic reviews. Today, The Cochrane Collaboration maintains The Cochrane Database of Systematic Reviews (CDSR), a database of systematic reviews and protocols, on The Cochrane Library, and comprises some 53 review groups publishing protocols and systematic reviews on a variety of health-related topics, including The Cochrane Heart Group, Hypertension Group and Stroke Group. Other organizations with similar objectives have emerged, including the Australian-based Joanna Briggs Institute (covering best evidence for global health care information), The Campbell Collaboration (a sister organization to The Cochrane Collaboration), which provides systematic evidence for issues of broader public policy, and the EPPI-Centre database, which provides well-designed evaluations of interventions in the fields of education and social welfare. For more information on each of these organizations, including access to resources and support offered, please refer to Table 2.

Conducting a Systematic Review

When should I consider getting involved in a systematic review, and what commitment and skills are required?

It may be that the answer to a clinical question you have cannot be found through an existing systematic review and hence you might consider embarking on your own review. There are many challenges and skills required for producing a systematic review. The skills required not only include having an understanding of the disease process, interventions, relevant outcomes and the patient experience; but also the ability to find and appraise all the relevant research, synthesize the findings using advanced statistical or qualitative techniques, and publish your findings. The processes of indexing retrieved studies, and extracting and managing the data can be daunting and some reviews will require specialist analytical software. Moreover, systematic reviews can be time-consuming and, depending upon the complexity of your question, typically take 1–2 years to complete. Perhaps for these reasons, many published systematic reviews are produced by teams working in collaboration with the support of a specialist systematic review organization (see Table 2) in order to achieve these many and varied tasks.

What resources are available for clinicians intending to carry out a systematic review?

There are several specialist systematic review organizations; so what should you look for when selecting an organization to work with? Many first-time reviewers, whether they are independent researchers or undertaking the review as part of a PhD or Professional Doctorate, feel that they benefit from attending workshops and accessing online training resources offered by some review organizations. There are many possible approaches and techniques for a review, so a review organization’s handbook and evidence-based guidance on the process could save you time. You will want the organization to be up to date with good reporting practice, and to ensure that their guidance, handbooks and style guides help you to achieve these standards. As a researcher, you will probably want the opportunity to communicate and exchange ideas with people from a broad range of disciplines, and some review organizations provide seminars, conferences and online discussion rooms, so creating a hub for a community. This kind of networking can be instrumental in acquiring the diverse skill mix required of coauthors to produce a well-researched and relevant review.

The perspectives of those involved in the care of people with cardiovascular disease can be different to those of other health care professionals. As such, the organization you choose to work with, or your own team of reviewers, should have strong input from relevant groups with appropriate expertise that can provide you with peer review and team members in order to achieve your review. Finally, one of your major goals will be to publish your findings, so look for an organization that will help you publish and make your review accessible to the public and professionals all over the world – perhaps without expensive journal registration fees. As part of the publishing process, you should expect peer review, copy editor support and the database or journal to be rated as of international standard. A summary of what support a nonexhaustive selection of organizations can provide for your review is given in Table 2. More information on each can be found at:

- Cochrane Collaboration: http://www.cochrane.org/

- Campbell Collaboration: http://www.campbellcollaboration.org/

- Joanna Briggs Institute: http://www.joannabriggs.edu.au/

- EPPI-Centre: http://eppi.ioe.ac.uk

In addition, there are data repositories that provide support for registering your review protocol and systematic review data, such as PROSPERO and the Systematic Review Data Repository (SRDR); these are easy-to-use web-based tools that extract and store information. They serve as a public repository of data and have a searchable archive of key questions addressed by systematic reviews. More information can be found at: Systematic Review Data Repository: http://srdr.ahrq.gov/; PROSPERO: http://www.crd.york.ac.uk/prospero/. Many journals also publish systematic reviews, and some will additionally publish systematic review protocols. There are even now dedicated journals for systematic reviews (http://www.systematicreviewsjournal.com/).

Steps of Doing a Systematic Review Including Writing a Suitable Protocol

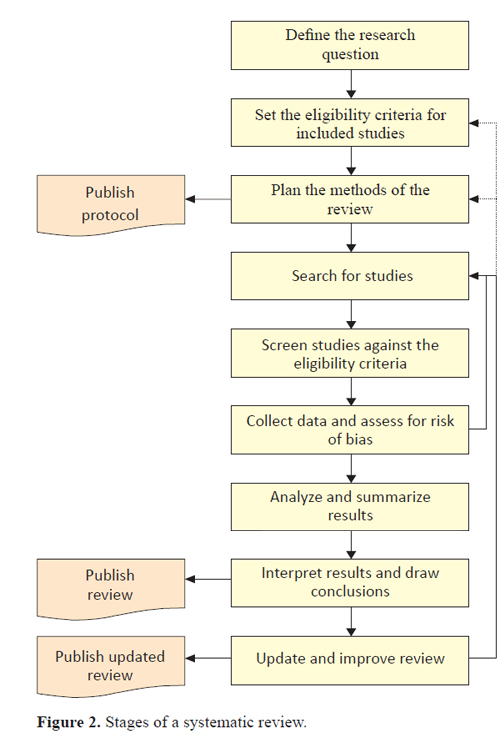

Whatever your choice of systematic review support organization, the methods of your review will follow commonly agreed steps as outlined in Figure 2.

For a systematic review to be deemed “systematic,” it needs to follow a set protocol, in order to be replicable, transparent, and (as much as possible) free from bias. The first stage of any systematic review should be to define the research question. Commonly for systematic reviews of the effectiveness of treatments, the question will follow a “PICOS” format (to define the Population, Intervention(s), Comparison(s), Outcomes and Study designs of interest to the review). These elements of the research question will be further set out through the eligibility criteria for the review. A protocol will be required to set out the types of research studies to be included in the review, and anything that does not meet these criteria will be excluded, based on the prespecified research question and a sound rationale. Protocols will also set out how studies will be searched for, and how (and which) data will be collected, analyzed and combined. It is a good practice to publish systematic review protocols to enhance the transparency of the process and avoid duplication. This can be done either through conducting the review via an organization such as the Cochrane Collaboration, or Campbell Collaboration, by registering the protocol in a dedicated database such as PROSPERO (see link above), or through publishing with a journal.
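As a rough illustration of how a PICOS question becomes prespecified eligibility criteria, the following Python sketch records the five elements and checks a candidate study against them. The class, the function, the field names and all example values are hypothetical and are not taken from any review organization's tooling; real screening depends on reviewer judgment recorded against the protocol, not on exact string matching.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PICOS:
    """Prespecified elements of a review question (illustrative only)."""
    population: str
    interventions: frozenset
    comparisons: frozenset
    outcomes: frozenset
    study_designs: frozenset


def is_eligible(question, study):
    """Return True if a study record (a dict) meets every PICOS criterion.

    A study must match the population, intervention, comparison and design,
    and must report at least one outcome of interest.
    """
    return (
        study["population"] == question.population
        and study["intervention"] in question.interventions
        and study["comparison"] in question.comparisons
        and study["design"] in question.study_designs
        and bool(question.outcomes & set(study["outcomes"]))
    )


# Hypothetical review question and candidate study.
question = PICOS(
    population="adults with heart failure",
    interventions=frozenset({"drug A"}),
    comparisons=frozenset({"placebo", "usual care"}),
    outcomes=frozenset({"all-cause mortality", "hospitalization"}),
    study_designs=frozenset({"randomized controlled trial"}),
)
candidate = {
    "population": "adults with heart failure",
    "intervention": "drug A",
    "comparison": "placebo",
    "design": "randomized controlled trial",
    "outcomes": ["all-cause mortality", "quality of life"],
}

print(is_eligible(question, candidate))
```

Writing the criteria down in this explicit form before searching mirrors the role of the protocol: a study is included or excluded because of the prespecified question, not because of its results.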

Having established the protocol, the next step is to search relevant electronic databases, reference lists and other sources as determined by the search strategy, to seek studies meeting the eligibility criteria. This would typically involve searching a range of relevant bibliographic databases using appropriate search terms, checking the reference lists of included studies, and looking for grey literature (unpublished studies). The most comprehensive systematic reviews will also search for studies published in languages other than English, although this can be time- and cost-prohibitive. Omitting studies not published in English can introduce bias, as can searching only for those published in academic journals (6). Note that even when a search appears to have retrieved every study, many studies are never published because their results were nonsignificant. Results of the search are screened against the eligibility criteria, typically by at least two independent people to avoid errors of judgment. Search results may be filtered by screening titles first, then abstracts of relevant-looking titles and finally the full reports. An audit trail is kept of the number of studies screened and excluded at each stage, and of the reasons for excluding studies which appeared relevant. You may find that systematic review software will help you to manage this stage (see Ref. 7 for further guidance on choosing appropriate software). It is not unusual to exclude large numbers of studies retrieved from the original search once the inclusion and exclusion criteria are fully considered.

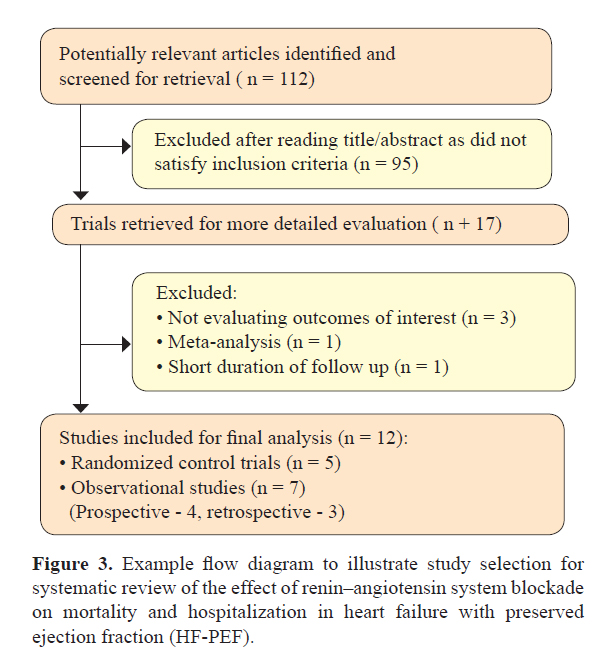

This can be appreciated in the illustrated example below, where the effect of renin–angiotensin system blockade on mortality and hospitalization in heart failure with preserved ejection fraction (HF-PEF) is studied (8). Here, a systematic search of electronic databases (MEDLINE, PubMed, EMBASE, and the Cochrane Library for Central Register of Clinical Trials) was performed using the MeSH terms “heart failure, diastolic,” “angiotensin-converting enzyme inhibitors” (ACE-I) and “angiotensin receptor antagonists” (ARB), together with the names of individual ACE-Is and ARBs. The search was limited to studies in human subjects, published in English in peer-reviewed journals from 1966 to June 2011. A manual search for relevant clinical studies from references of the screened articles was additionally carried out. The authors defined their eligibility criteria to be (a) prospective (randomized or nonrandomized) or retrospective study designs assessing the effectiveness of renin–angiotensin system inhibitors (ACE-I or ARB) for HF-PEF (defined as signs or symptoms of heart failure and EF >40%); (b) studies reporting outcomes of interest, including mortality (all-cause and/or cardiac) and hospitalizations due to heart failure; and (c) studies with at least a 6-month follow-up in each study arm. Exclusion criteria were (a) healthy persons used as controls, (b) patients following heart transplantation, (c) absence of quantitative description of end points, (d) lack of clear and reproducible results, and (e) trials in the abstract form without a published manuscript in a peer-reviewed journal. Studies that had duplicated data, including the same group of patients or for whom there were updated results available, were excluded. Figure 3 illustrates this process, and is an example of application of the PRISMA (preferred reporting items for systematic reviews and meta-analyses) checklist (http://www.prisma-statement.org/statement.htm).

Hence, only about 10% of the original selection of articles made it through the entire screening process to the final analysis. Data extraction is also often performed independently by two authors, with disagreements being resolved by arbitration and discussion. It is crucial to predefine criteria for study selection and data analysis to ensure transparency and reproducibility while generating an effective and meaningful systematic review.
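The audit trail kept during screening can be sketched in a few lines of Python. The function name, the stage labels and the counts below are hypothetical and are not the figures from the review shown in Figure 3; they are chosen only so that about 10% of the retrieved records survive, as in the example above.

```python
def screening_flow(identified, exclusions):
    """Track how many records enter and leave each screening stage.

    `exclusions` is an ordered list of (stage name, number excluded) pairs.
    Returns the per-stage rows and the number of studies finally included.
    """
    rows = []
    remaining = identified
    for stage, excluded in exclusions:
        if excluded > remaining:
            raise ValueError(f"cannot exclude {excluded} of {remaining} at stage '{stage}'")
        rows.append((stage, remaining, excluded, remaining - excluded))
        remaining -= excluded
    return rows, remaining


# Hypothetical counts for illustration only.
identified = 200
flow, included = screening_flow(
    identified,
    [
        ("duplicates removed", 20),
        ("titles", 100),
        ("abstracts", 40),
        ("full reports", 20),
    ],
)

for stage, records_in, excluded, records_out in flow:
    print(f"{stage}: {records_in} screened, {excluded} excluded, {records_out} retained")
print(f"included: {included} of {identified} ({included / identified:.0%})")
```

Keeping the counts in one structure makes it straightforward to report them in a flow diagram, and to add the reasons for exclusion at the full-report stage.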

Next, data are collected from studies meeting the review criteria, to include information on PICOS characteristics, outcome data and risk of bias assessments. Risk of bias assessment should be done, when possible, using a predetermined, validated tool (or equivalent for qualitative studies if included) (9). Bias is a systematic deviation from the truth; it may overestimate or underestimate the true effect, and it may be large or small. Bias can be minimized during the conduct of studies (e.g., through randomizing participants, concealing allocation, blinding patients, researchers and healthcare personnel, accounting for withdrawals and drop-outs, and reporting outcomes fully), and these are tangible things that can be assessed in a systematic review to form judgments about the risk of bias, and about how believable the results of the studies are. As with screening, independent assessment by two review authors is a method to reduce bias in this process. If studies included in a review are at a high risk of bias, one may place less confidence in their findings. The data are then summarized through narrative and tables and, where appropriate, statistical tests to combine included studies (meta-analyses) may be undertaken to provide statistical summaries of the study results.

Where there is a high degree of heterogeneity (variability between studies, either in types of participants, interventions or outcomes, or study design, risk of bias or results), it may not be appropriate to combine their results using meta-analysis. This is sometimes referred to as being like combining apples and oranges (10). If there is reason to believe that the intervention would work differently in subgroups of the sample (e.g., populations at high or low risk), then subgroup analyses should also be conducted. Meta-analyses are typically presented in forest plots (see the next section for how to interpret these). Where meta-analysis is not appropriate, the results may be presented using tables and text. Prior to completing the review, it would be advisable to consider rerunning the search to check for any recently published studies that the first search may have missed.
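Statistical heterogeneity in study results is often quantified before deciding whether to pool. The sketch below computes two commonly used statistics, Cochran's Q and I², which are not named in the text above and are shown here only as one possible way to make "a high degree of heterogeneity" concrete. The function name and the example values are hypothetical, and the statistics address variability in results only, not clinical or methodological differences between studies.

```python
def heterogeneity(estimates, std_errors):
    """Return Cochran's Q, its degrees of freedom, and I-squared (as a percentage).

    `estimates` are study-level effects on an additive scale (e.g., mean
    differences or log risk ratios) and `std_errors` their standard errors.
    """
    weights = [1.0 / se ** 2 for se in std_errors]
    pooled = sum(w * y for w, y in zip(weights, estimates)) / sum(weights)
    # Q sums the weighted squared deviations of each study from the pooled effect.
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, estimates))
    df = len(estimates) - 1
    # I-squared is the share of Q in excess of what chance alone would produce.
    i_squared = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return q, df, i_squared


# Hypothetical studies whose results disagree strongly.
q_high, df_high, i2_high = heterogeneity([0.0, 1.0], [0.1, 0.1])

# Hypothetical studies with identical results.
q_none, df_none, i2_none = heterogeneity([0.5, 0.5, 0.5], [0.1, 0.2, 0.3])

print(round(q_high, 1), df_high, round(i2_high, 1))
print(round(q_none, 1), df_none, round(i2_none, 1))
```

A large I² suggests that differences between study results exceed what chance would explain, which supports presenting the results narratively or exploring subgroups rather than reporting a single pooled estimate.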

The interpretation of data and conclusions drawn should be grounded in the risk of bias of the included studies, so as to reflect the believability of the findings, as well as the direction and precision of results relating to the benefits and harms of the interventions assessed. All this information should then be written up in an accessible format to help inform practitioners, patients, policy-makers, and others who may be interested in the review findings. It is a good practice to then keep your review up-to-date and consider republishing it every few years depending on the speed of progress in the field. It is possible at this stage that you need to go back to your protocol and reconsider if the methods are still fit-for-purpose (Fig. 2).

Reading and Critically Appraising Systematic Reviews

Why is it important to critically appraise systematic reviews?

If there are existing systematic reviews that relate to your clinical question, you may be able to apply the findings of an existing review to your current practice rather than conduct a new review. Systematic reviews are currently considered as one of the highest forms of research evidence and it can be tempting to view a systematic review as providing “the definitive answer” to a clinical question. Those conducted under the auspices of the Cochrane Collaboration are held in particularly high regard (7) but on a general note, there are a number of reasons as to why caution is required in the interpretation and application of the findings of a systematic review.

Firstly, the quality of systematic reviews is variable (11). If a systematic review has not been well conducted, there would be concern regarding the validity of its findings. Secondly, even if a systematic review has been well-conducted it might have identified significant limitations or gaps in the current evidence base, and rightly recommend that its findings are interpreted with caution (although this is a good rationale for conducting some new research!). Similarly, a well-conducted systematic review might not have been recently updated and its findings might not therefore be based on current primary research. The inclusion of more recent studies has the potential to change the findings of the systematic review (12). A well-conducted systematic review might (for a number of reasons) have only included studies on participants very dissimilar to the patient(s) the clinician has in mind. Should this be the case, there may be reasons to suspect that the findings are not applicable to the patient(s) in question. Hence, wherever a systematic review has been published, it is important to critically appraise it before using it to inform practice.

There are two key questions you should ask when you are considering the implications of the findings of a systematic review for your practice. The first is “are the participants, intervention and outcome relevant to my clinical question?” It may be that the inclusion criteria of the systematic review do not cover the types of participants, interventions and/or outcomes in which you are interested. For example, the participants may not be similar to your patients or the setting may differ from that in which you work (e.g., community versus acute care), the intervention may not be one in which you are interested (perhaps it is not feasible to implement in your setting) and the outcomes may not be relevant (e.g., you may be interested in mortality but the review has considered only anxiety). It may also be, however, that the inclusion criteria do cover these but no relevant studies have yet been conducted.

The second question is “what do the results mean for practice?” You should consider whether the intervention was more effective than the control and if so, examine the effect size – is the effect meaningful in clinical practice? Does the size of the effect outweigh the cost to implement the intervention? Also look at the confidence intervals (CIs) – if the true effect was at the upper or lower bound, would this change your decision about whether to implement the intervention? It is important to interpret these for yourself so that you can check whether the conclusions of the systematic review accurately reflect the findings. A further consideration is the type of studies on which the results have been based. For example, you might place more confidence in results that are based on randomized controlled trials at a low risk of bias than those at a high risk of bias or other study designs such as controlled before and after studies. It is worth checking that, based on issues around risk of bias, you agree with the conclusions made by the systematic review authors. The GRADE approach provides guidelines for making recommendations based on research evidence, which you may find help you to consider how to interpret the findings of systematic reviews (see http://cebgrade.mcmaster.ca and http://www.gradeworkinggroup.org/guidelines/index.htm for further guidance).

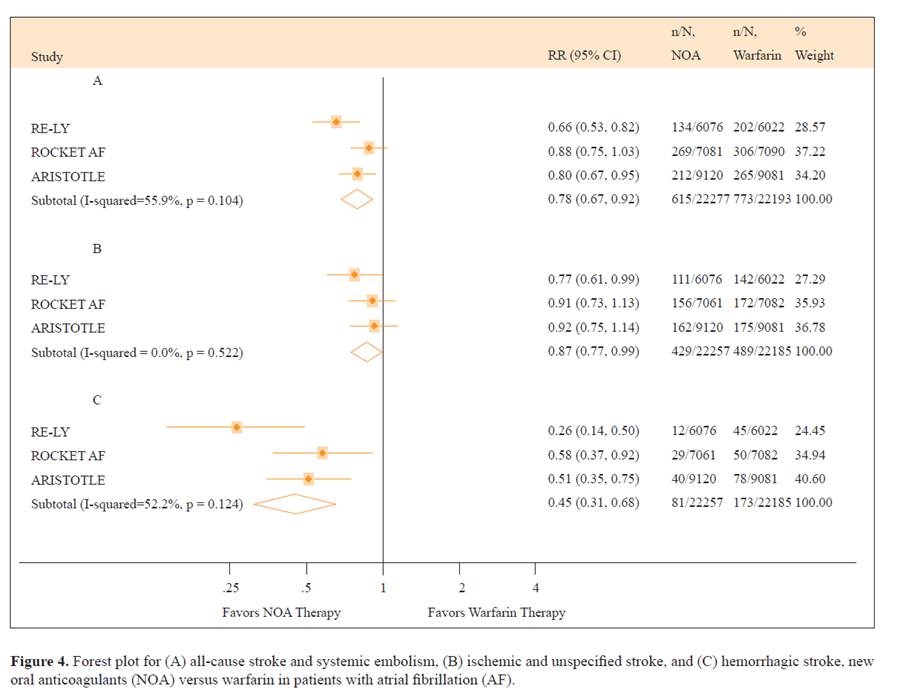

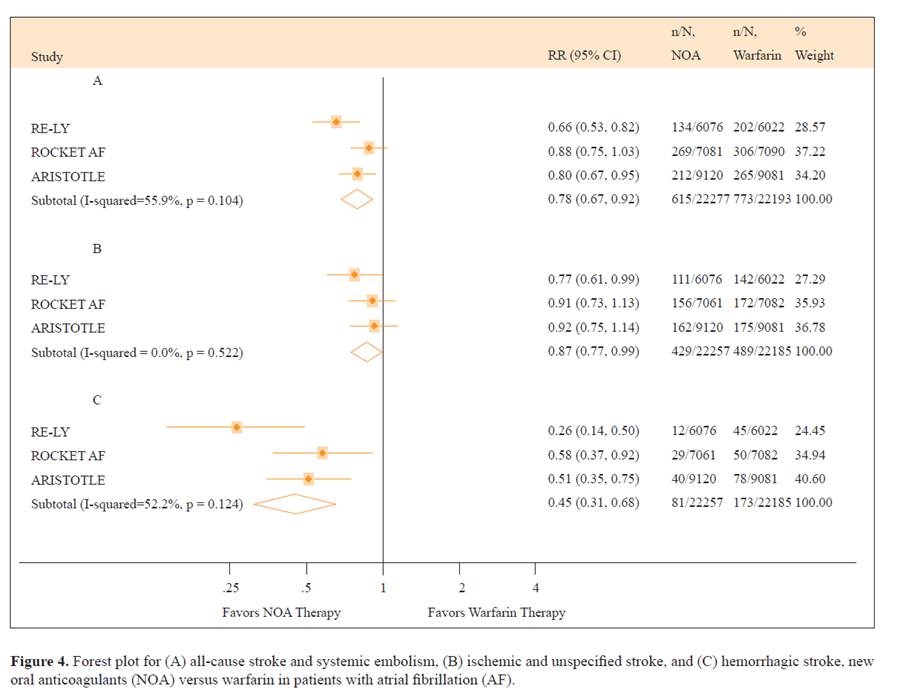

The example in Figure 4 illustrates the forest plot from a systematic review by Miller et al. looking at the efficacy and safety of newer anticoagulants versus warfarin for atrial fibrillation (14). The outcomes measured here are all-cause stroke and systemic embolism, ischemic and unspecified stroke, and hemorrhagic stroke. The rows of the forest plot represent the individual studies included for that comparison and outcome. On the left of the forest plot you will find a list of these included studies (commonly given a study ID consisting of the first author’s name and the year in which the study was published). The sample size for the control and intervention groups may also be given, as will either the mean scores (with standard deviations) for continuous outcomes such as blood pressure or the number of people with the outcome (e.g., number of people who had a cardiac arrest). The number of events may also be used (e.g., number of cardiac arrests) and can be summarized using a rate ratio (for rare events), or mean difference (for common events). The information at the bottom of the forest plot relates to the data that have been pooled from all the included studies. There is generally a total sample size for control and intervention groups.

The line that runs up the middle of the plot is called “the line of no effect.” For all effects represented by “ratios” (e.g., odds, risk, or hazard ratios), the line of no effect is placed at 1 on the horizontal axis. For all effects represented by absolute differences (e.g., mean differences), it is placed at 0. The square blocks represent the point estimate of the effect for each study (larger blocks indicate that the study was given more weight in the analysis, for example, because it had a larger sample size), and the horizontal lines represent the CIs. In a meta-analysis, studies with narrower CIs are given more weight in the analysis, and those with wider CIs are given relatively less weight; this is referred to as weighting according to the inverse of the variance. For ease of interpretation, the numerical data are also written in a table beside the forest plot. If you look at the diamond at the bottom of the plot, the middle of the diamond is the point estimate of the effect size and the widest points of the diamond represent the CIs.

The point estimate indicates the size of the effect (the further from the line, the greater the effect) and the CIs the range in which the true effect size might actually be. Hence, narrower CIs allow us to be more confident that the estimated effect size is close to the true effect size. It is important to consider, however, that even if the CIs do not cross the line of no effect, they may still only represent a clinically trivial increase or decrease in the outcome. For full interpretation of data, there are a whole raft of factors that should be considered together (not simply the statistical answer to your question); these issues are incorporated into the GRADE approach (Grading of Recommendations Assessment, Development and Evaluation) for assessing the evidence (15).
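The weighting and confidence interval ideas behind a forest plot can be made concrete with a small Python sketch of inverse-variance pooling. It assumes a fixed-effect model and a 95% CI based on the normal approximation, neither of which is specified in the text above, and the study values are hypothetical rather than taken from Figure 4. Ratio measures are pooled on the log scale, where the line of no effect sits at 0 (equivalent to a ratio of 1).

```python
import math


def pool_fixed_effect(estimates, std_errors):
    """Inverse-variance (fixed-effect) pooling of study-level effects.

    Returns the pooled estimate, its 95% CI and each study's relative weight.
    For ratio measures, pass log-transformed estimates and exponentiate the results.
    """
    weights = [1.0 / se ** 2 for se in std_errors]  # narrower CI -> larger weight
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total
    pooled_se = math.sqrt(1.0 / total)
    ci = (pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se)
    rel_weights = [w / total for w in weights]
    return pooled, ci, rel_weights


def crosses_no_effect(ci_low, ci_high, null_value=0.0):
    """True if the CI includes the line of no effect (0 on the log or difference scale)."""
    return ci_low <= null_value <= ci_high


# Hypothetical log risk ratios and standard errors for three studies.
log_rr = [math.log(0.80), math.log(0.90), math.log(0.70)]
se = [0.10, 0.20, 0.40]

pooled, (ci_low, ci_high), rel_weights = pool_fixed_effect(log_rr, se)

print("relative weights:", [round(w, 3) for w in rel_weights])
print("pooled RR:", round(math.exp(pooled), 2))
print("95% CI:", round(math.exp(ci_low), 2), "to", round(math.exp(ci_high), 2))
print("crosses line of no effect:", crosses_no_effect(ci_low, ci_high))
```

The pooled estimate corresponds to the middle of the diamond and the CI limits to its widest points; whether an effect of that size matters clinically remains a separate judgment.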

Taking into account the interpretation above, the forest plot in Figure 4 indicates that the new oral anticoagulants reduced the risk for a composite end point of stroke and systemic embolism compared to warfarin and were also associated with a lower risk for key secondary efficacy outcomes (ischemic and unidentified stroke, hemorrhagic stroke, all-cause mortality, and vascular mortality). It should be noted, however, that the upper CI for ischemic and unidentified stroke is very close to the line of no effect (0.99). When making decisions related to practice, it is important to consider that, should the true effect lie at this point, newer anticoagulants would only very marginally reduce the risk of ischemic and unidentified stroke as compared with warfarin.

Summary

In summary, systematic reviews (if well-conducted) can be an invaluable resource for providing an up-to-date and systematic summary of the current evidence for particular interventions. Overviews of systematic reviews are also useful because they provide summaries of groups of related systematic reviews (e.g., summarizing the evidence of the effectiveness of a number of different interventions for a certain outcome or disease). These can be particularly useful for informing policy decisions about which interventions are most effective. If a clinically important question has not yet been addressed by a systematic review, you might wish to consider undertaking one yourself. While systematic reviews can be challenging and time-consuming to conduct, they can also be extremely rewarding. Interacting with experts in the field will ensure the best approach and will help foster new collaborations in your area. Systematic reviews present the ideal opportunity to undertake in-depth assessments of evidence relevant to your practice and have the potential to influence national guidelines in your area. Additionally they represent a chance to publish without (or before) undertaking primary research.

Acknowledgments

The authors would like to thank Dr. Rebecca Stores and Dr. Chris Markham for their support and useful comments on the manuscript.

What to consider when critically appraising a systematic review

When critically appraising a systematic review, it is important to bear in mind the points made above about the steps that should be followed in order to conduct a good systematic review. In addition, we would recommend that you use a structured critical appraisal checklist, such as those published by the Critical Appraisal Skills Programme (www.casp-uk.net) or the AMSTAR questionnaire (13). General types of questions often considered by such checklists include the following:

- Is the review question focused and clear?

- Was the search comprehensive?

- Were studies selected in an unbiased manner?

- Was the risk of bias of included studies assessed?

- Were the data analyzed appropriately?

- Were the results presented appropriately?

- Should I, and how should I, apply the findings of the systematic review to my practice?

There are two key questions you should ask when you are considering the implications of the findings of a systematic review for your practice. The first is “are the participants, intervention and outcome relevant to my clinical question?” It may be that the inclusion criteria of the systematic review do not cover the types of participants, interventions and/or outcomes in which you are interested. For example, the participants may not be similar to your patients or the setting may differ from that in which you work (e.g., community versus acute care), the intervention may not be one in which you are interested (perhaps it is not feasible to implement in your setting) and the outcomes may not be relevant (e.g., you may be interested in mortality but the review has considered only anxiety). It may also be, however, that the inclusion criteria do cover these but no relevant studies have yet been conducted.

The second question is “what do the results mean for practice?” You should consider whether the intervention was more effective than the control and, if so, examine the effect size: is the effect meaningful in clinical practice? Does the size of the effect justify the cost of implementing the intervention? Also look at the confidence intervals (CIs): if the true effect were at the upper or lower bound, would this change your decision about whether to implement the intervention? It is important to interpret these for yourself so that you can check whether the conclusions of the systematic review accurately reflect the findings. A further consideration is the type of studies on which the results have been based. For example, you might place more confidence in results that are based on randomized controlled trials at a low risk of bias than in those based on trials at a high risk of bias or on other study designs such as controlled before and after studies. It is worth checking that, based on issues around risk of bias, you agree with the conclusions made by the systematic review authors. The GRADE approach provides guidelines for making recommendations based on research evidence, which you may find helpful when considering how to interpret the findings of systematic reviews (see http://cebgrade.mcmaster.ca and http://www.gradeworkinggroup.org/guidelines/index.htm for further guidance).
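One way to make the question about the confidence bounds concrete is the following minimal Python sketch. It assumes a ratio outcome (such as a risk ratio) for an undesirable event, so that values below 1 favor the intervention, and a hypothetical threshold representing the largest ratio you would still regard as clinically worthwhile. The function name, the threshold, and the example numbers are illustrative assumptions and are not taken from any review.

```python
def decision_at_bounds(ci_lower, ci_upper, worthwhile_ratio):
    """Check whether a decision would hold at both ends of a confidence interval.

    Assumes a ratio measure (e.g., a risk ratio) for an undesirable outcome,
    so smaller values mean a larger benefit. `worthwhile_ratio` is a
    hypothetical threshold chosen by the reader, not a published standard.
    """
    if not 0 < ci_lower <= ci_upper:
        raise ValueError("expected 0 < ci_lower <= ci_upper")
    best_case = ci_lower <= worthwhile_ratio   # true effect at the most favorable bound
    worst_case = ci_upper <= worthwhile_ratio  # true effect at the least favorable bound
    return {
        "worthwhile_at_best_case": best_case,
        "worthwhile_at_worst_case": worst_case,
        "decision_stable": best_case == worst_case,
    }


# Illustrative numbers only: a 95% CI of 0.70 to 0.95 and a threshold of 0.90.
example = decision_at_bounds(0.70, 0.95, worthwhile_ratio=0.90)
print(example)
```

When `decision_stable` is false, the interval is compatible with both a worthwhile and a negligible effect, which is a reason to interpret the pooled result with caution.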

Interpreting forest plots

Although not all systematic reviews conduct meta-analyses, many do and present their findings in the form of forest plots. These present, for each comparison and outcome, both the study effects (and CIs) derived from individual studies and a pooled effect of all the studies. Forest plots may look complicated but do not be put off, because they are actually relatively simple to understand. Figure 4 shows the forest plot from a systematic review by Miller et al. looking at the efficacy and safety of newer anticoagulants versus warfarin for atrial fibrillation (14). The outcomes measured here are all-cause stroke and systemic embolism, ischemic and unidentified stroke, and hemorrhagic stroke. The rows of the forest plot represent the individual studies included for that comparison and outcome. On the left of the forest plot you will find a list of these included studies (commonly given a study ID consisting of the first author’s name and the year in which the study was published). The sample size for the control and intervention groups may also be given, as will either the mean scores (with standard deviations) for continuous outcomes such as blood pressure or the number of people with the outcome (e.g., number of people who had a cardiac arrest). The number of events may also be used (e.g., number of cardiac arrests) and can be summarized using a rate ratio (for rare events) or a mean difference (for common events). The information at the bottom of the forest plot relates to the data that have been pooled from all the included studies. There is generally a total sample size for the control and intervention groups.

The line that runs up the middle of the plot is called “the line of no effect.” For all effects represented by “ratios” (e.g., odds, risk, or hazard ratios) the line of no effect is placed at 1 on the horizontal axis. For all effects represented by absolute differences (e.g., mean differences), it is placed at 0. The square blocks represent the point estimate of the effect for each study (larger blocks indicate that the study was given more weight in the analysis, for example, because it had a larger sample size), and the horizontal lines represent the CIs. In a meta-analysis, studies with narrower CIs are given more weight in the analysis, and those with wider CIs are given relatively less weight; this is referred to as weighting according to the inverse of the variance. For ease of interpretation, the numerical data are also written in a table beside the forest plot. If you look at the diamond at the bottom of the plot, the middle of the diamond is the point estimate of the pooled effect size and the widest points of the diamond represent its CIs:

- If the CIs cross the line of no effect, then the current evidence does not show a statistically significant difference between the intervention and the control. This is not the same as demonstrating that the two are equivalent, and the estimate may change if new evidence is incorporated.

- If the diamond is to the right of the line, and the CIs do not cross the line of no effect, then the evidence indicates that the intervention increases the outcome of interest (the evidence will favor the control if the outcome is undesirable).

- Conversely, if the diamond is to the left of the line, and the CIs do not cross the line of no effect, then the evidence indicates that the intervention decreases the outcome of interest (the evidence will favor the intervention if the outcome is undesirable).
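The inverse-variance weighting and the reading of the diamond described above can be sketched in Python. This is a minimal fixed-effect sketch for ratio measures, assuming that each study reports a ratio with a 95% CI and that the standard error can be recovered from the CI width on the log scale. The three studies are invented for illustration and are not taken from Figure 4; the function names are hypothetical, and a real review would normally use dedicated meta-analysis software and might prefer a random-effects model.

```python
import math

Z_95 = 1.96  # normal quantile used for a 95% confidence interval


def pool_fixed_effect(studies):
    """Pool ratio estimates using fixed-effect inverse-variance weighting.

    `studies` is a list of (ratio, ci_lower, ci_upper) tuples with 95% CIs.
    Returns the pooled ratio, its 95% CI, and each study's relative weight.
    """
    weights, log_effects = [], []
    for ratio, lower, upper in studies:
        # Standard error on the log scale, recovered from the CI width
        se = (math.log(upper) - math.log(lower)) / (2 * Z_95)
        weights.append(1.0 / se ** 2)  # narrower CI -> larger weight
        log_effects.append(math.log(ratio))
    total = sum(weights)
    pooled_log = sum(w * y for w, y in zip(weights, log_effects)) / total
    pooled_se = math.sqrt(1.0 / total)
    ci = (math.exp(pooled_log - Z_95 * pooled_se),
          math.exp(pooled_log + Z_95 * pooled_se))
    return math.exp(pooled_log), ci, [w / total for w in weights]


def read_diamond(ci, no_effect=1.0):
    """Describe where a pooled CI sits relative to the line of no effect."""
    lower, upper = ci
    if lower <= no_effect <= upper:
        return "crosses the line of no effect"
    if upper < no_effect:
        return "left of the line: outcome decreased"
    return "right of the line: outcome increased"


# Hypothetical studies: (risk ratio, lower 95% limit, upper 95% limit)
studies = [(0.80, 0.60, 1.07), (0.90, 0.80, 1.01), (0.70, 0.45, 1.09)]
pooled, pooled_ci, rel_weights = pool_fixed_effect(studies)
print(round(pooled, 2), [round(x, 2) for x in pooled_ci])
print([round(w, 2) for w in rel_weights], read_diamond(pooled_ci))
```

In this invented example, each individual study's CI crosses 1, yet the study with the narrowest CI dominates the weighting and the pooled CI is narrower than any single study's, which is the practical motivation for pooling.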

The point estimate indicates the size of the effect (the further from the line, the greater the effect) and the CIs indicate the range in which the true effect size might plausibly lie. Hence, narrower CIs allow us to be more confident that the estimated effect size is close to the true effect size. It is important to consider, however, that even if the CIs do not cross the line of no effect, they may still only represent a clinically trivial increase or decrease in the outcome. For full interpretation of data, there is a whole raft of factors that should be considered together (not simply the statistical answer to your question); these issues are incorporated into the GRADE approach (Grading of Recommendations Assessment, Development and Evaluation) for assessing the evidence (15).

Taking into account the interpretation above, the forest plot in Figure 4 shows that the new oral anticoagulants reduced the risk for a composite end point of stroke and systemic embolism compared to warfarin and were also associated with a lower risk for key secondary efficacy outcomes (ischemic and unidentified stroke, hemorrhagic stroke, all-cause mortality, and vascular mortality). It should be noted, however, that the upper CI for ischemic and unidentified stroke is very close to the line of no effect (0.99). When making decisions related to practice, it is important to consider that, should the true effect lie at this point, newer anticoagulants would only very marginally reduce the risk of ischemic and unidentified stroke as compared with warfarin.
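The arithmetic behind this caution can be sketched in Python. The ratio of 0.99 is the upper confidence limit quoted above; the baseline risk is a purely hypothetical value chosen for illustration, and the function names are assumptions made for this sketch.

```python
def relative_risk_reduction(risk_ratio):
    """Relative risk reduction implied by a risk ratio (1 minus the ratio)."""
    return 1.0 - risk_ratio


def events_avoided_per_1000(risk_ratio, baseline_risk):
    """Events avoided per 1000 patients treated, for a given control-group risk."""
    return 1000 * baseline_risk * relative_risk_reduction(risk_ratio)


upper_limit = 0.99            # upper CI bound quoted in the text
hypothetical_baseline = 0.02  # assumed 2% risk in the control group (illustrative only)

rrr = relative_risk_reduction(upper_limit)
avoided = events_avoided_per_1000(upper_limit, hypothetical_baseline)
print(f"relative risk reduction: {rrr:.1%}")
print(f"events avoided per 1000 treated: {avoided:.1f}")
```

If the true effect were at this bound, the relative reduction would be about 1%, which under the assumed baseline risk corresponds to a fraction of one event per 1000 patients treated.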

Summary

In summary, systematic reviews (if well-conducted) can be an invaluable resource for providing an up-to-date and systematic summary of the current evidence for particular interventions. Overviews of systematic reviews are also useful because they provide summaries of groups of related systematic reviews (e.g., summarizing the evidence of the effectiveness of a number of different interventions for a certain outcome or disease). These can be particularly useful for informing policy decisions about which interventions are most effective. If a clinically important question has not yet been addressed by a systematic review, you might wish to consider undertaking one yourself. While systematic reviews can be challenging and time-consuming to conduct, they can also be extremely rewarding. Interacting with experts in the field will help you to choose the best approach and to foster new collaborations in your area. Systematic reviews present an ideal opportunity to undertake in-depth assessments of evidence relevant to your practice and have the potential to influence national guidelines in your area. Additionally, they represent a chance to publish without (or before) undertaking primary research.

Acknowledgments

The authors would like to thank Dr. Rebecca Stores and Dr. Chris Markham for their support and useful comments on the manuscript.

Conflict of Interest

None

References

- Agarwal V, Briasoulis A, Messerli FH; Effects of renin-angiotensin system blockade on mortality and hospitalization in heart failure with preserved ejection fraction; Heart Fail Rev. 2012 Jun 8. [Epub ahead of print]

- Brunton, J., & Thomas, J. (2012). Information management in reviews. In D. Gough, S. Oliver & J. Thomas (Eds.), An Introduction to Systematic Reviews (pp. 83-106). London: Sage.

- Chan RJ, Wong A. Two decades of exceptional achievements: Does the evidence support nurses to favour Cochrane systematic reviews over other systematic reviews? Int J Nurs Stud. Jul 2012;49(7):773-774.

- Choi PT, Halpern SH, Malik N, Jadad AR, Tramer MR, Walder B. Examining the evidence in anesthesia literature: a critical appraisal of systematic reviews. Anesth Analg. Mar 2001;92(3):700-709.

- Deeks J, Higgins J, Altman DG. Analysing data and undertaking meta-analyses. In: Higgins J, Green S, eds. Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 [updated March 2011]: The Cochrane Collaboration; 2011.

- Higgins J, Deeks J. Selecting studies and collecting data. In: Higgins J, Green S, eds. Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 [updated March 2011]: The Cochrane Collaboration; 2011.

- Higgins J, Altman DG, Sterne J. Assessing risk of bias in included studies. Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 [updated March 2011]: The Cochrane Collaboration; 2011.

- Guyatt G, Oxman AD, Akl EA, Kunz R, Vist G, Brozek J, et al. GRADE guidelines: 1. Introduction-GRADE evidence profiles and summary of findings tables. J Clin Epidemiol. 2011;64(4):383-94. DOI: 10.1016/j.jclinepi.2010.04.026

- Jadad AR, Moore RA, Carroll D, Jenkinson C, Reynolds DJ, Gavaghan DJ, McQuay HJ (1996) Assessing the quality of reports of randomized clinical trials: is blinding necessary? Control Clin Trials 17(1):1–12

- Khan KS, ter Riet G, Glanville J, Sowden AJ and Kleijnen J, NHS Centre for Reviews and Dissemination (Ed.) (2001) Undertaking Systematic Review of Research on Effectiveness. CRD’s Guidance for those Carrying out or Commissioning Reviews. York: University of York.

- Lefebvre C, Manheimer E, Glanville J. Searching for studies. In: Higgins J, Green S, eds. Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 [updated March 2011]: The Cochrane Collaboration; 2011.

- Miller CS, Grandi SM, Shimony A, Filion KB, Eisenberg MJ; Meta-analysis of efficacy and safety of new oral anticoagulants (dabigatran, rivaroxaban, apixaban) versus warfarin in patients with atrial fibrillation; Am J Cardiol. 2012 Aug 1;110(3):453-60. Epub 2012 Apr 24.

- NHS Quality Improvement Scotland. Clinical Governance and Risk Management: Achieving safe, effective patient-focused care and services. NHS QIS 2005. http://www.clinicalgovernance.scot.nhs.uk/documents/CGRM_CSF_Oct05.pdf

- Perry A and Hammond N (2002) Systematic Reviews: The experiences of a PhD Student. Psychology Learning and Teaching 2, 32-35.

- Rees K, Beranek-Stanley M, Burke M, Ebrahim S. Hypothermia to reduce neurological damage following coronary artery bypass surgery. Cochrane Database Syst Rev. 2001;(1):CD002138.

- Shea BJ, Grimshaw JM, Wells GA, et al. Development of AMSTAR: a measurement tool to assess the methodological quality of systematic reviews. BMC Medical Research Methodology. 2007;7:10.

- Shojania KG, Sampson M, Ansari MT, Ji J, Doucette S, Moher D. How Quickly Do Systematic Reviews Go Out of Date? A Survival Analysis. Annals of Internal Medicine. 2007;147(4):224-233.

- White A and Schmidt K (2005) Systematic literature reviews. Complementary Therapies in Medicine 13, 54-60.

Print: ISSN: 2250-3528